How will today’s near-perfect machine translation and AI text generation impact disinformation detection on social media?

Comparing NLP architectures on their sensitivity to machine translation induced noise for automated disinformation detection.

A 6 by 7 experimental design of feature extraction methods and classification algorithms

Project Summary

The spread of disinformation through social media has become a major concern in recent years. Disinformation can have serious consequences, from shaping public opinion and causing real-world harm to undermining democratic processes. A new development is the availability of free, high-quality, real-time language translation, which is quickly becoming ubiquitous in the online and social media landscape. This study investigated the impact of Machine Translation (MT) on the disinformation detection performance of NLP architectures by comparing AUC ROC scores at increasing levels of MT noise. Six of the most commonly used feature extraction methods (CountVectorizer, HashingVectorizer, TF-IDF, GloVe, Word2Vec and FastText) and seven common classification algorithms (Logistic Regression, Naive Bayes, Support Vector Machines, Random Forest, K-Nearest Neighbours, Gradient Boosting and Extreme Gradient Boosting) were used to measure the decrease in performance as MT noise increased. These 42 architectures were compared on four English-language test sets differing only in their level of MT-induced noise. This study found that MT significantly lowers the performance of disinformation detection NLP architectures across the board. Furthermore, it found that certain feature extraction methods and classification algorithms were significantly more strongly impacted than others. These findings are highly valuable in the context of disinformation spread on social media platforms, as they suggest that the current trend in machine translation services, and more recently the explosion in LLM use, is likely to exacerbate the disinformation problem by letting disinformation slip through NLP-based detection methods at higher rates.

Introduction

Social media platforms have revolutionized the way information is consumed and spread around the world (Centola, 2010; Kaplan, 2015). Through platforms such as Facebook, Twitter, Instagram and more recently TikTok, a plethora of news and information is now more easily accessible than ever before. However, with the rise of social media, there has also been an increase in the spread of disinformation.

As captured fittingly by Lazer et al. (2018, p. 1094), “Social media platforms have enabled the rapid spread of information, both true and false, across wide networks of individuals”. In particular, the spread of disinformation has become a major concern in recent years. Disinformation can have serious consequences, from shaping public opinions to undermining democratic processes (Guess & Lyon, 2020; Lewandowsky et al., 2012; Tucker et al., 2018).

Disinformation, misinformation and ‘fake news’ are all forms of false or misleading information that, through social media platforms, spread more rapidly than ever before. These terms are not strictly and unilaterally defined, and as such the terms ‘misinformation’ as well as ‘disinformation’ are commonly used as an umbrella term to capture information that is false or inaccurate, regardless of intention to deceive (Lewandowsky et al., 2012; Tandoc et al., 2018; Tucker et al., 2018). In other works a narrower definition is used, in which the term ‘misinformation’ is used only for unintentionally false information, while ‘disinformation’ is used for intentionally false information that aims to influence the public (Tandoc, 2019; Wardle, 2017).

- ‘Disinformation’ is a form of false information that is deliberately spread with the intention of misleading or manipulating the public. Disinformation often involves the use of propaganda, false narratives, and other tactics designed to deceive and manipulate people. Disinformation campaigns can be orchestrated by governments, political groups, or other organizations seeking to achieve specific goals, such as undermining political opponents or destabilising foreign governments.

- ‘Fake news’ commonly refers to intentionally fabricated or misleading news stories that are spread to misinform or manipulate the public. This type of false information often aims to generate clicks, views, or engagement for financial or political gain. Fake news can be created and distributed by individuals, organizations, or even foreign entities seeking to influence public opinion.

- ‘Propaganda’, though similar to disinformation and fake news, can consist of factually correct information, but packaged in a way so as to disparage opposing viewpoints, the point being not so much to present information as it is to rally public support (Born et al., 2017). An informal graphic that illustrates this categorization based on these two dimensions can be found in appendix A.

This study, while primarily focused on intentional disinformation such as fake news, propaganda and political disinformation, does not exclude other forms of misinformation that were most likely shared without misleading or (politically) manipulative intent. This keeps the focus more representative of the broad spectrum of disinformation found on social media in the real world (1).

Despite efforts by social media companies to address and combat harmful content including certain forms of disinformation, its spread seems to continue through these platforms. The challenges faced in combatting disinformation are not limited to social media platforms and are often underestimated for multiple reasons, some of which are briefly mentioned here.

- Disinformation can be difficult to distinguish from legitimate information, especially if it appears to come from a credible source or is presented in a convincing way. A challenge that is compounded by the fact that what may be considered true or false today might not have been perceived that way in the past.

- Disinformation can become deeply ingrained in people’s beliefs and attitudes, making it difficult to correct even with accurate information (e.g. in the form of clarifying banners attached to posts).

- Social media algorithms commonly tend to prioritize engagement (as a way to provide content that is of interest to users more than content that is not) which results in sensational or controversial posts, such as disinformation, being more likely to get promoted.

- Content including disinformation on social media can spread extremely rapidly and can be shared simultaneously by a large number of users, which can make it difficult to isolate and contain. On top of this, social media platforms have limited resources to police content and rely in part on users to report disinformation. Such reports can in turn be faulty or biased, and require resources to be validated prior to taking action. User reporting can also easily become a form of online vigilantism, spreading false rumors and malicious content (Shu et al., 2019, p. 26).

- Even if disinformation is correctly identified and removed, it can continue to circulate on other platforms or through private or end-to-end encrypted messaging, after which it might find its way back onto a platform in an altered form, language and/or medium.

A more recent development in the field of natural language processing (NLP) and ‘artificial intelligence’ (AI) that indirectly exacerbates the last point above is the rise of ‘transformers’ and transformer-based models. Transformers are a type of neural network architecture that has revolutionized NLP. Introduced in the 2017 research paper ‘Attention is all you need’ by Vaswani et al., the transformer architecture uses a self-attention mechanism to process sequential data such as text. This allows the model to focus on different parts of the input text and capture long-range dependencies, making it particularly powerful for tasks such as language modelling and machine translation (MT). On top of this, it helped enable parallel processing, which allowed for enormous increases in the size of training datasets. Well-known examples of transformer-based models and applications are ‘Google Translate’ and, more recently, the ‘large language models’ (LLMs). The latter has colloquially become the collective term for transformer-based models and applications trained on enormous (textual) datasets, such as ‘(Chat)GPT’ (OpenAI) and competing services and models such as ‘Bard’ (Google), ‘Alpaca’ (Stanford University) and ‘Llama’ (Meta).

Whether it is the Google Translate service in its many freely available forms, other transformer-based translation services built into social media platforms themselves, or translation through an LLM, language barriers are becoming virtually non-existent on social media. While this may be great for helping interaction and information spread across languages, machine translations are not always accurate and can sometimes be biased (KhudaBukhsh et al., 2021). In addition, the use of machine translation services can result in miscommunication, particularly when it comes to subtle nuances of language (Vieira et al., 2021). On top of this, the recent rise of LLMs moves beyond the narrow scope of translation: text-generation capabilities, with ChatGPT as primary example, suggest an even larger role for transformer-generated or transformer-translated content in the spread of disinformation through social media in the immediate future (3).

Interestingly, techniques from this same field of AI and NLP are often hailed as the only truly scalable method for combatting disinformation on social media platforms. However, success here is not guaranteed, and technical complexities further complicate the challenges outlined above. A more detailed list of technical challenges associated with such an approach, taken from de Oliveira et al., 2021, can be found in Appendix B. While at this point it cannot be stated with certainty when, if, or with what accuracy this approach will be able to solve the disinformation problem, it can be said that this seems to be the method social media platforms are increasing their reliance on (4). Unless platforms are incentivized otherwise, e.g. by new government regulation (5), this means that these NLP-based detection methods are becoming the de facto method primarily relied upon to shield social media’s four billion daily active users from disinformation.

This increasing reliance on NLP-based detection as a primary means of combatting the spread of disinformation through social media, combined with the newfound ubiquity of real-time translation services, makes it crucial to understand if, and to what extent, MT-induced noise impacts common NLP architectures.

This study tested the hypothesis that machine translations impact the ability to detect disinformation when relying on existing NLP architectures for automated disinformation detection.

It also tested two secondary hypotheses.

- Firstly, that (some of) the feature extraction methods differ in the overall NLP architecture’s sensitivity to the MT induced noise when correcting for the classification algorithm.

- And secondly, that (some of) the most commonly used general purpose classification algorithms differ in the overall NLP architecture’s sensitivity to the MT induced noise when correcting for the feature extraction method.

Methodology

Summary of procedure

The impact of MT on detection performance was assessed by isolating the effect of translation from language-training effects: disinformation detection performance was measured at increasing levels of MT while keeping all texts in English.

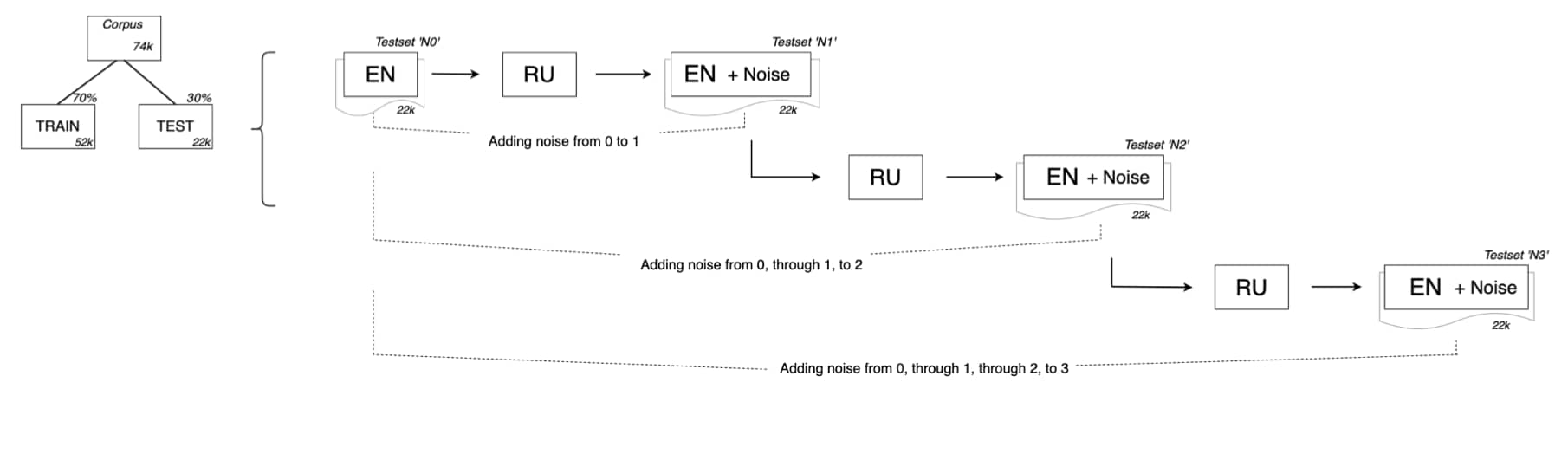

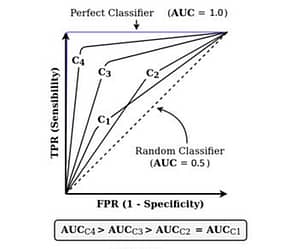

This is operationalized by using increasing levels of back-translation on a single test set (a 30% split from the original corpus), as can be seen in figure 1. This allows a misinformation detection architecture to make predictions on several versions of the unseen test set. Each version of the test set contains the same source texts, but with a different level of MT (and with that, of MT-induced noise). The predictions on each version of the test set were evaluated separately using AUC ROC scores. This approach resulted in 42 different architectures (each with four AUC ROC scores) to be compared. The motivation for this extensive approach was twofold. Firstly, it aims to allow for a safer generalization with regard to the primary research question of the overall impact of MT on misinformation detection. Secondly, it aims to allow for a better separation of the effects of feature extraction methods from those of the classification algorithms when it comes to text classification in the field of disinformation detection.
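The per-test-set evaluation can be illustrated with a minimal sketch. It is not the code used in this study: the `roc_auc` helper is a hypothetical name, it uses the rank-based (Mann-Whitney) formulation of AUC ROC, and the labels and scores are made-up toy values chosen only to show one architecture being scored on the four versions of the same test set.

```python
def roc_auc(y_true, scores):
    """AUC ROC as the probability that a random positive outranks a random negative."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    # A positive scoring above a negative counts as 1, a tie counts as 0.5.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data (assumption): the same six texts, scored at noise levels N0..N3.
y_true = [1, 1, 1, 0, 0, 0]
scores_by_level = {
    "N0": [0.9, 0.8, 0.7, 0.3, 0.2, 0.1],
    "N1": [0.9, 0.8, 0.4, 0.5, 0.2, 0.1],
    "N2": [0.9, 0.5, 0.4, 0.6, 0.5, 0.1],
    "N3": [0.6, 0.5, 0.4, 0.6, 0.5, 0.3],
}

# One AUC ROC score per version of the test set.
auc_by_level = {level: roc_auc(y_true, s) for level, s in scores_by_level.items()}
```

Because the labels are identical across the four versions, any difference between the four scores can only come from the changed texts.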

Data set

While the focus in terms of subtypes of disinformation for this study was political disinformation, the dataset was formed to more realistically reflect the variety of misinformation present on social media. This was realized by compiling five existing datasets. The largest proportion of misinformation in this compiled dataset can be categorized as political disinformation articles that were collected from websites flagged by Politifact (6) as disinformation and compiled in the ‘ISOT (7) fake news’ dataset.

A second large proportion consists of a mix of pro-Kremlin propaganda and pro-Kremlin disinformation in web article form, collected by the EUvsDisinfo (8) initiative. A third significant proportion consists of a mix of misinformation and disinformation in the form of tweets (i.e. posts on the Twitter platform), added to increase representativity through their short text form, often less professional writing style and potentially different nature in terms of intent. The tweets were selected either because they were flagged as containing falsehoods by fact-checking services such as Politifact and Snopes (9), or because they rebuked information shared by the World Health Organization, the US Center for Disease Control or the UK National Health Service. To create a balanced dataset, an equal number of ‘true’ texts were included. These come from a mix of sources and formats (ranging from professional articles to Twitter texts), with a large proportion made up of web-scraped articles from Reuters publications.

Altogether this compiled dataset consists of just under 74k texts (10).

Inducing MT noise through back-translation

From the dataset a 30% split was taken (using the train_test_split function from the scikit-learn package, with a fixed random seed), resulting in a test set of just over 22k texts. This set (referred to as ‘test set N0’) was translated to a different language (Russian) and then from Russian back to English. This provided an altered copy (‘test set N1’) of the original test set, differing from it only in the noise induced by being machine-translated twice. This process was repeated another two times, resulting in test set N2 (one further back-translation from N1, or two from N0) and test set N3 (three back-translations from the original test set). This process is schematically represented in figure 1.
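The chaining of back-translations can be sketched as follows. This is an illustration under stated assumptions rather than the script used in this study: `back_translate`, `build_noise_levels` and `toy_translate` are hypothetical names, and `toy_translate` is a stand-in that merely drops one character per round trip instead of calling a real MT service.

```python
def back_translate(text, translate, source="en", pivot="ru"):
    """One back-translation round: source language -> pivot language -> source language."""
    return translate(translate(text, source, pivot), pivot, source)


def build_noise_levels(texts, translate, levels=3):
    """Return {"N0": originals, "N1": ..., ...}; each level is derived from the previous one."""
    versions = {"N0": list(texts)}
    for k in range(1, levels + 1):
        previous = versions[f"N{k - 1}"]
        versions[f"N{k}"] = [back_translate(t, translate) for t in previous]
    return versions


def toy_translate(text, src, dest):
    # Hypothetical stand-in for an MT call (assumption): the text loses its
    # last character whenever it is translated back into English.
    return text[:-1] if dest == "en" else text


test_sets = build_noise_levels(["fake news!!!"], toy_translate)
```

Passing the translator in as a function keeps the level-building logic independent of whichever translation service is used.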

Figure 1. A schematic representation of the data manipulation process depicting the steps from corpus to original test set to the three test sets with varying levels of MT induced noise.

For this process to closely represent real world use present on social media platforms, the same service and version that is most widely used today, Google translate (the free version that is implemented in google’s web interface at e.g. https://translate.google.com), was used for MT. This meant the ‘google trans 3.0.0’ API (11) that leverages the Google Translate AJAX API was preferred over the (paid) ‘cloud translate V2’ packages that google also provides for larger scale translations.

However, this API limits both the number of characters a single text may contain and the amount of text that can be processed in a single sequence. The first limitation was accepted by cutting off all text after 2800 characters (12). It was checked that this did not impact either class disproportionately, as that might give an artificial cue for a classification algorithm to pick up on. The second limitation was circumvented by batching. Since multiple translations (two for each back-translation) were required without running into memory or API time-out problems, a custom ‘batching’ script was created. This script used the split function to separate the test set into 50 dataframes of 6k texts each, which were back-translated to form the new columns ‘testset_N1’, ‘testset_N2’, and ‘testset_N3’, and exported to Google Drive storage one by one. Python’s dictionary datatype was used to store these 50 dataframes, now four columns wide, and combine them back into a single dataframe containing the four test sets that were used throughout this study.
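A simplified sketch of the truncation and batching logic is given below. It uses plain Python lists instead of dataframes, and the helper names (`truncate`, `split_into_batches`, `process_in_batches`) as well as the `transform` argument are assumptions made for illustration; the export of each finished batch to storage is only indicated by a comment.

```python
MAX_CHARS = 2800  # character cut-off stated in the text


def truncate(text, max_chars=MAX_CHARS):
    """Cut a text off after max_chars characters."""
    return text[:max_chars]


def split_into_batches(items, n_batches):
    """Split items into n_batches contiguous chunks of near-equal size."""
    size, extra = divmod(len(items), n_batches)
    batches, start = [], 0
    for i in range(n_batches):
        end = start + size + (1 if i < extra else 0)
        batches.append(items[start:end])
        start = end
    return batches


def process_in_batches(texts, transform, n_batches=50):
    """Apply transform batch by batch and recombine the results in order."""
    results = {}  # batch index -> processed texts
    for idx, batch in enumerate(split_into_batches(texts, n_batches)):
        results[idx] = [transform(truncate(t)) for t in batch]
        # Each finished batch could be written to storage here before continuing.
    return [t for idx in sorted(results) for t in results[idx]]


# Usage with a trivial stand-in transformation (assumption, not a translation):
processed = process_in_batches(["x" * 3000] + ["ab"] * 120, str.upper)
```

Storing the batches in a dictionary keyed by batch index makes it possible to resume after a time-out without recomputing finished batches.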

Lexical Distance as proxy for MT induced noise

Aside from ordinal back-translation levels (taking the role of the independent variable in this study), a proxy to estimate the MT induced noise on a more continuous level was added. For this the edit-based Jaro-Winkler distance was used. The Jaro-Winkler distance is a string similarity metric that is often used in natural language processing to compare two strings and estimate their lexical distance. It can be viewed as an expansion to the Jaro similarity, which measures the similarity between two strings in terms of the number of matching characters and the number of transpositions needed to convert one string into the other.

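The Jaro similarity sim_{j} of two given strings s_1 and s_2 is calculated using the following equation:

sim_{j} = \begin{cases} 0 & \text{if } m = 0 \\ \frac{1}{3}\left(\frac{m}{|s_1|} + \frac{m}{|s_2|} + \frac{m - t}{m}\right) & \text{otherwise} \end{cases}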

Here |s_i| is the length of the string s_i , m is the number of matching characters and t is the number of transpositions.

The score is 0 if the strings have zero matches, and 1 if they are a perfect match. Two characters are considered ‘matching’ only if they are the same character and their positions lie within a certain range of each other. That range is a function of the length of the longer of the two strings:

\left\lfloor \frac{\max(|s_1|, |s_2|)}{2} \right\rfloor - 1

The Jaro-Winkler similarity algorithm expands on this by adding a scaling factor that gives more weight to the similarity of the initial characters of the strings (Winkler, 1990). This is based on the observation that similar words often have similar beginnings, and the scaling factor helps to capture this similarity more effectively. This is done as follows:

sim_{jw} = sim_{j} + \ell p (1 - sim_{j}),

where sim_{jw} is the Jaro-Winkler similarity, \ell is the length of the common prefix of the two strings, and p is a constant scaling factor (13). From this, the distance is simply the complement of the similarity score: dist_{jw} = 1 - sim_{jw}.

The Jaro-Winkler distance has the advantage of computational efficiency over certain other lexical similarity metrics such as Levenshtein distance (Wang et al., 2017), which was beneficial in this study given the combined size of the three test sets on which it was computed.
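For readers who prefer code over notation, the metric can be sketched in plain Python. The function names are hypothetical, the scaling factor p = 0.1 and the cap of four prefix characters are the commonly used Winkler defaults and are assumed here, and t is counted as half the number of matched characters that appear in a different order.

```python
def jaro_similarity(s1, s2):
    """Jaro similarity of two strings (1.0 = identical, 0.0 = no matching characters)."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    match_range = max(max(len1, len2) // 2 - 1, 0)
    matched1 = [False] * len1
    matched2 = [False] * len2
    m = 0
    for i, ch in enumerate(s1):
        lo = max(0, i - match_range)
        hi = min(len2, i + match_range + 1)
        for j in range(lo, hi):
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                m += 1
                break
    if m == 0:
        return 0.0
    # Matched characters in their original order; mismatching positions
    # are half-transpositions.
    seq1 = [c for c, flag in zip(s1, matched1) if flag]
    seq2 = [c for c, flag in zip(s2, matched2) if flag]
    t = sum(a != b for a, b in zip(seq1, seq2)) / 2
    return (m / len1 + m / len2 + (m - t) / m) / 3


def jaro_winkler_distance(s1, s2, p=0.1, max_prefix=4):
    """1 minus the Jaro-Winkler similarity; p and max_prefix are assumed defaults."""
    sim_j = jaro_similarity(s1, s2)
    prefix = 0
    for a, b in zip(s1, s2):
        if a != b or prefix == max_prefix:
            break
        prefix += 1
    sim_jw = sim_j + prefix * p * (1 - sim_j)
    return 1 - sim_jw
```

In the setting of this study, `s1` would be an original text and `s2` its back-translated counterpart, so that a larger distance indicates more MT-induced change.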

NLP architectures

This study looks at 42 NLP classification architectures. These 42 architectures consist of the combinations of six commonly used feature extraction methods and seven commonly used general purpose classification algorithms (as listed in table 1).

Table 1. An overview of the Feature Extraction Methods and General Purpose Classification Algorithms used in this study.
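The 6 by 7 design can be enumerated in a few lines. The sketch below only lists the combinations by name; it does not build the actual models, and the variable names are chosen for illustration.

```python
from itertools import product

FEATURE_EXTRACTORS = [
    "CountVectorizer", "HashingVectorizer", "TF-IDF",
    "GloVe", "Word2Vec", "FastText",
]
CLASSIFIERS = [
    "Logistic Regression", "Naive Bayes", "Support Vector Machines",
    "Random Forest", "K-Nearest Neighbours", "Gradient Boosting",
    "Extreme Gradient Boosting",
]
TEST_SETS = ["N0", "N1", "N2", "N3"]

# Every feature extraction method is paired with every classification algorithm.
architectures = list(product(FEATURE_EXTRACTORS, CLASSIFIERS))

# Each architecture yields one AUC ROC score per version of the test set.
result_cells = [(fe, clf, ts) for fe, clf in architectures for ts in TEST_SETS]
```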

Feature Extraction Methods

Feature extraction methods, also known as vectorization techniques (or ‘embeddings’ in the case of GloVe, Word2Vec and FastText), form a fundamental process throughout all of NLP. Feature extraction is the process by which raw text (14) is transformed into a numerical representation (commonly in the form of a vector space model) which is machine-readable and can be processed using various algorithms for NLP tasks such as classification.

In this study CountVectorizer, HashingVectorizer, TF-IDF, GloVe, Word2Vec and FastText were used.

CountVectorizer

CountVectorizer is a bag-of-words technique that represents texts as a matrix of word counts. Each row represents a document and each column represents a unique word in the entire corpus. The values in the matrix indicate the frequency of each word in each document. This process involves several steps. First, it tokenizes the text into individual words and, if configured to do so, removes stop words. Then a vocabulary of all the unique words in the corpus is built. Finally, it counts the frequency of each word in each document and creates the matrix of word counts. Mathematically, each row of this matrix can be expressed as:

V_D = [\:w_1,\: w_2,\: w_3,...,\: w_{n-1},\: w_n\:]

where V_D is the vector for document D and w_i is the weight (here the count) of the i’th of the n terms in the vocabulary. For the implementation in this study the scikit-learn package was used. It is important to note that this bag-of-words technique does not capture any meaning or context of the words; it simply counts the occurrences of each word in each document.
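The counting procedure can be illustrated with a small standard-library sketch. It is a simplified stand-in for scikit-learn's CountVectorizer, not a reimplementation of it: the tokenizer, the function names and the example documents are assumptions made for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens (simplified tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def fit_vocabulary(docs):
    """Map every unique token in the corpus to a column index."""
    vocab = sorted({tok for doc in docs for tok in tokenize(doc)})
    return {term: idx for idx, term in enumerate(vocab)}


def count_vectors(docs, vocabulary):
    """One row of counts per document; tokens outside the vocabulary are ignored."""
    rows = []
    for doc in docs:
        counts = Counter(tokenize(doc))
        rows.append([counts.get(term, 0) for term in vocabulary])
    return rows


train_docs = ["Fake news spreads fast", "Real news spreads"]
vocabulary = fit_vocabulary(train_docs)
train_matrix = count_vectors(train_docs, vocabulary)
unseen_row = count_vectors(["fake fake rumour"], vocabulary)[0]
```

The last line shows a property that matters for this study: a word that was never seen during fitting (here ‘rumour’) has no column and is silently dropped, so wording changes introduced by MT can remove information from the representation.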

HashingVectorizer

HashingVectorizer is a feature extraction method that shares its bag-of-words approach with CountVectorizer. However, instead of creating a vocabulary of all the unique words in the corpus, HashingVectorizer uses a hashing function to map words to a fixed-length feature space. The hashing function takes each word in the text and maps it to an index in the feature space, and the count at that index in the feature vector is then incremented. Because the feature space has a fixed size, different words can occasionally be mapped to the same index (a ‘collision’), so the resulting vector approximates the frequency of each word in the document. An advantage of HashingVectorizer is that it does not need to store the entire vocabulary in memory, which makes it more memory-efficient than CountVectorizer, especially when dealing with datasets as large as the one in this study or larger. However, because the hashing function is not reversible, it is not possible to determine which words correspond to which indices in the feature space. This makes the technique difficult to use for anything that requires interpretation of the created features. This study implemented HashingVectorizer using the scikit-learn package as the second of the six implemented feature extraction methods.
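A minimal sketch of the hashing idea follows. It is not scikit-learn's implementation: `zlib.crc32` is swapped in as a readily available hash function, the feature space is kept tiny for readability, and the function name and tokenizer are assumptions.

```python
import re
import zlib


def hashed_counts(text, n_features=16):
    """Bag-of-words counts in a fixed-length vector, indexed by a hash of each token."""
    vec = [0] * n_features
    for tok in re.findall(r"[a-z0-9]+", text.lower()):
        # crc32 is deterministic across runs, unlike Python's built-in hash() for strings.
        idx = zlib.crc32(tok.encode("utf-8")) % n_features
        vec[idx] += 1
    return vec


vector = hashed_counts("fake news fake", n_features=8)
collided = hashed_counts("a b c", n_features=1)  # every token lands on index 0
```

No vocabulary is stored at any point, which is where the memory advantage comes from. The second call shows the price: with too few features, distinct words share an index and can no longer be told apart.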

TF-IDF

The CountVectorizer and HashingVectorizer techniques share a significant shortcoming: they presume that all individual terms in the corpus are equally important. Such an assumption can be problematic, since terms with high occurrence in a single document can end up being overestimated in an evaluation based simply on the total sum of each term in the corpus (Manning, 2009). TF-IDF aims to resolve this by measuring the degree of semantic relevance of a document term in relation to the entire collection. An insightful explanation can be found in de Oliveira et al. (2021), which is briefly summarized as follows. TF-IDF computes the value of each word in a document using the equation:

TFIDF = tf_{t,d} \cdot idf_t

The representation for each word is the product of two statistical measures, the term frequency and the inverse document frequency. The first factor of this multiplication, tf_{t,d}, is calculated by dividing the number of occurrences, n_{t,d}, of a term t in the document d, by the total number of terms in document d:

tf_{t,d} = \frac{n_{t,d}}{\Sigma_{w\in d} \:n_{w,d}}

The second factor, idf_t, reflects how rare the term t is across the documents of the corpus:

idf_t = \log \frac{N}{df_t}

Here N is the number of documents in the corpus and df_t is the number of documents that contain the term t. The idea behind this is that words that are common across all documents in the corpus are less informative for a specific document than words that appear in only a few documents. This way the TF-IDF value for a word increases proportionally with the number of times it appears in the document (TF), and decreases as the number of documents in the corpus that contain that word grows (IDF). This results in a strong correlation between the ‘informative relevance’ of a word and its weight in the vector space, which is greatly beneficial for TF-IDF as a feature extraction method. However, this logic breaks down in the case of synonyms, as they are not counted together with the words that share their meaning. TF-IDF was implemented using scikit-learn as the third of six feature extraction methods.
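As a worked illustration, the weighting can be computed by hand for a two-document toy corpus. The sketch assumes the plain, unsmoothed inverse document frequency log(N / df_t) with a natural logarithm, where df_t is the number of documents containing term t; library implementations such as scikit-learn apply additional smoothing and normalization, so their numbers will differ. The function name and the corpus are made up.

```python
import math
from collections import Counter


def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each term occur?
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        weights.append(
            {t: (c / total) * math.log(n_docs / df[t]) for t, c in counts.items()}
        )
    return weights


corpus = [["fake", "news", "fake"], ["real", "news"]]
weights = tf_idf(corpus)
```

The term ‘news’ occurs in both documents, so its weight is zero in both; ‘fake’ occurs twice in the first document and nowhere else, so it receives the highest weight there.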

Word2Vec

Both the bag-of-words techniques (CountVectorizer and HashingVectorizer) and the TF-IDF technique treat words as completely independent entities that do not have any semantic connection with each other, which is not true in natural language. Aside from the synonym problem in TF-IDF, the use of homonyms such as ‘bank’, which in a sentence derive their meaning from the words surrounding them, can serve as an example of the importance of context in natural language. The following three feature extraction methods discussed here, starting with Word2Vec, are often referred to as ‘word embeddings’, and they aim to solve this context blind spot. Word embedding techniques were popularized largely due to the success of the Word2Vec model developed at Google by researchers Mikolov, Chen, Corrado, and Dean (Mikolov et al., 2013). Word2Vec is a neural-network-trained word embedding model that computes the vector representation of words using two possible architectures, Continuous Bag-of-Words (CBOW) and Skip-gram (15), as seen in figure 2.

Figure 2. ‘The CBOW architecture predicts the current word based on the context, and the Skip-gram predicts surrounding words given the current word’, image taken from the Mikolov et al., 2013 paper introducing the word2vec model.

Both methods divide the texts into two groups, target word and context. The ‘context’ is operationalized as (a limited set of) the words that surround the target word. The CBOW architecture aims to predict the target word from the surrounding context words, whereas the Skip-gram model aims to predict the surrounding context words given the target word (16).
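The difference between the two architectures is easiest to see in the training examples they are fed. The sketch below only generates those examples for a toy sentence; it does not train a network, and the function names, the window size and the sentence are assumptions made for illustration.

```python
def context_window(tokens, i, window):
    """The up-to-`window` words on either side of position i."""
    left = tokens[max(0, i - window):i]
    right = tokens[i + 1:i + 1 + window]
    return left + right


def cbow_examples(tokens, window=2):
    """CBOW: (list of context words) -> target word."""
    return [(context_window(tokens, i, window), tokens[i]) for i in range(len(tokens))]


def skipgram_examples(tokens, window=2):
    """Skip-gram: target word -> one context word per example."""
    return [
        (tokens[i], ctx)
        for i in range(len(tokens))
        for ctx in context_window(tokens, i, window)
    ]


sentence = ["the", "bank", "raised", "rates"]
cbow = cbow_examples(sentence, window=1)
skipgram = skipgram_examples(sentence, window=1)
```

For the word ‘bank’, CBOW produces a single example with the input [‘the’, ‘raised’], whereas Skip-gram produces two examples, (‘bank’, ‘the’) and (‘bank’, ‘raised’).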

In either architecture, Word2Vec learns a dense vector representation of each word based on its co-occurrence with other words in the corpus. The vector representations are such that words with similar meanings and usage patterns are located close to each other in the vector space. In this way, unlike the previously described feature extraction methods, Word2Vec aims to capture the semantic and syntactic relationships between words in their vector representations. For this study the CBOW-based embedding was chosen, as it was described as faster and better suited for large datasets in the original paper. It should be noted, however, that later studies suggest that the Skip-gram architecture might have a performance edge over the CBOW architecture in sentence-based classification (Lai et al., 2016). In the current study the pretrained 300-dimensional Word2Vec (CBOW) embeddings were reduced to 50 dimensions using principal component analysis (PCA), and as such were used as the fourth of six feature extraction methods (17).

GloVe

GloVe, derived from ‘Global Vectors for word representation’, is an unsupervised learning algorithm for generating word embeddings developed by Pennington et al. at Stanford University in 2014. Just like Word2Vec, GloVe aims to capture the semantic relationships between words, but it does so in a different way. While Word2Vec learns from local context windows with a neural network, GloVe starts from a global co-occurrence matrix. This matrix records how often each word appears in the context of every other word in the corpus, and from it the probability of co-occurrence between each pair of words can be calculated. GloVe’s core idea is that ratios of co-occurrence probabilities carry meaning, and that word vectors should therefore be learned such that their dot product approximates the logarithm of the words’ co-occurrence count. This is formulated as a weighted least-squares minimization problem. Specifically, GloVe aims to minimize this cost function:

J = \sum_{i=1}^{V} \sum_{j=1}^{V} f(X_{ij}) (w_i^T w_j + b_i + b_j - \log X_{ij})^2,

where X_{ij} (as an element of the word co-occurrence matrix X) represents how often word i appears in context of word j, w_i and w_j are the word vectors for words i (main) and j (context) with b_i and b_j as their corresponding scalar biases. Here f is a weighting function which helps prevent GloVe from learning only from extremely common word pairs (more details on the default weighting function can be found in the original GloVe paper (Pennington et al., 2014), or in summarized form from the cran-r project text2vec documentation (18)).

The optimization problem is solved using stochastic gradient descent (SGD), which updates the word vectors iteratively in the direction of the negative gradient to minimize the cost function. One of the key advantages of GloVe is that it can be trained efficiently on large corpora, since the co-occurrence matrix can be precomputed and stored in memory. The pretrained 50 dimensional GloVe embeddings were used as the fifth of six feature extraction methods used in this study.
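To make the cost function concrete, the sketch below evaluates it for given vectors and biases. It only computes J and does not perform the optimization. The weighting function uses the defaults from the original GloVe paper (x_max = 100, alpha = 0.75); the function names and the toy values are assumptions made for illustration.

```python
import math


def glove_weight(x, x_max=100.0, alpha=0.75):
    """Weighting function f: grows with the count x and is capped at 1."""
    return (x / x_max) ** alpha if x < x_max else 1.0


def glove_cost(X, W, W_ctx, b, b_ctx):
    """Weighted squared error between w_i . w_j + b_i + b_j and log X_ij."""
    cost = 0.0
    for i, row in enumerate(X):
        for j, x_ij in enumerate(row):
            if x_ij == 0:
                continue  # f(0) = 0, so pairs that never co-occur contribute nothing
            dot = sum(wi * wj for wi, wj in zip(W[i], W_ctx[j]))
            err = dot + b[i] + b_ctx[j] - math.log(x_ij)
            cost += glove_weight(x_ij) * err ** 2
    return cost


# Toy setup (assumption): two words that co-occur 100 times with each other.
X = [[0, 100], [100, 0]]
zero_vectors = [[0.0, 0.0], [0.0, 0.0]]
perfect_fit = glove_cost(X, zero_vectors, zero_vectors, [math.log(100)] * 2, [0.0, 0.0])
poor_fit = glove_cost(X, zero_vectors, zero_vectors, [0.0, 0.0], [0.0, 0.0])
```

When the biases alone already reproduce log X_ij the cost is zero; with everything set to zero, each of the two observed pairs contributes (log 100)^2.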

FastText

The third and final embedding technique used in this study was FastText. FastText is another unsupervised learning algorithm for generating word embeddings. It was developed in 2017 by Mikolov (see also ‘Word2Vec’), this time with colleagues at Facebook’s AI research department (19). FastText can be seen as a variant of Word2Vec that extends its approach by incorporating subword information into the embeddings. FastText does this by representing each word as a bag of the character n-grams (subsequences) that appear in it.

The main advantage of FastText over Word2Vec is that it can handle out-of-vocabulary words better. This is because the character n-grams capture subword information that can still be used to represent unseen words. This is better understood using an example. If the word “Trump” is in the training data, but the word “Trumpism” is not, FastText can still generate an embedding for “Trumpism” by combining the embeddings of the character n-grams “Trump” and “ism”.

To realize this, FastText uses a neural network similar to Word2Vec. The key difference is that instead of learning embeddings for complete words, FastText learns embeddings for each character n-gram in the word, and then aggregates these embeddings to obtain the final word embedding. Specifically, the word embedding is calculated as the sum of the embeddings of all the character n-grams in the word, followed by a normalization step. Just like Word2Vec, FastText has two training architectures (CBOW and skip-gram). In CBOW mode, the model tries to predict the middle word given its surrounding words (context), while in skip-gram mode, the model tries to predict the surrounding words given the middle word.
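The subword mechanism can be sketched as follows. This is a simplified illustration, not the FastText implementation: the word is wrapped in the boundary markers ‘<’ and ‘>’ and split into n-grams of 3 to 6 characters (the library defaults), but the hashing of n-grams into buckets and the extra vector for the whole word are left out. The function names and the tiny n-gram table are made up.

```python
def char_ngrams(word, min_n=3, max_n=6):
    """All character n-grams of the word, including boundary markers."""
    marked = f"<{word}>"
    return [
        marked[i:i + n]
        for n in range(min_n, max_n + 1)
        for i in range(len(marked) - n + 1)
    ]


def embed_word(word, ngram_vectors, dim, min_n=3, max_n=6):
    """Sum the vectors of all known n-grams; unknown n-grams contribute nothing."""
    vec = [0.0] * dim
    for gram in char_ngrams(word, min_n, max_n):
        for k, value in enumerate(ngram_vectors.get(gram, ())):
            vec[k] += value
    return vec


# Hypothetical two-dimensional n-gram vectors learned from other words.
ngram_vectors = {"<tr": [1.0, 0.0], "ism": [0.0, 2.0]}
unseen_word_vector = embed_word("trumpism", ngram_vectors, dim=2, min_n=3, max_n=3)
```

Even though ‘trumpism’ itself has no entry, it still receives a non-zero vector from the n-grams it shares with words seen during training.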

Perhaps the main disadvantage of FastText is that it tends to generate very large embeddings, especially when using longer character n-grams. This can be mitigated by reducing the size of the embedding vectors, but this may also reduce the quality of the embeddings. However, its ability to handle out-of-vocabulary words makes it particularly useful in settings where the vocabulary is large and constantly evolving, such as in social media or news. For this study the pretrained 300 dimensional FastText embeddings were reduced to 50 dimensions using PCA (as was done for the Word2Vec embeddings), and as such were used as the last of the six feature extraction methods included in this study.

Classification Algorithms

General-purpose classification algorithms are machine learning algorithms that are used to classify data into categories or classes based on their features or attributes. In this study Logistic Regression, Naive Bayes, Support Vector Machines, Random Forest, K-Nearest Neighbours, Gradient Boosting and Extreme Gradient Boosting were implemented. Each algorithm is briefly described in terms of only its broadest characteristics. Mathematical representations were included where deemed helpful in understanding the broad differences between the algorithms, but a detailed comprehension of their inner workings is not required in order to understand this study. In each of these mathematical representations, a set of input features X = [\: x_1, \: x_2, x_3,..., \: x_{n-1}, \: x_n \: ] and the to-be-predicted classes as a binary response variable Y \in \{0,1\} are assumed, unless otherwise specified. For each model the most common techniques against overfitting are briefly mentioned. In this study heavy use of such techniques was avoided to prevent potential confounding effects from differences in tuning across models, unless performance on the train set strongly exceeded performance on the test set.

Logistic regression

Logistic regression is a linear classification algorithm that models the probability of an input data point belonging to a particular class. Mathematically the logistic regression model can be understood with the following equation for the conditional class probability given X:

P(Y=1 | X) = \frac{\exp(\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n)}{1 + \exp(\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n)}

where \beta_0, \beta_1, \beta_2, \dots, \beta_n are the model parameters (the coefficients in regression terms) to be learned from the training data. The logistic function maps the linear combination of the input features to a probability value between 0 and 1. The model is most commonly trained using maximum likelihood estimation, which aims to maximize the log-likelihood of the observed data given the model parameters. This can be done most efficiently using iterative optimization algorithms such as gradient descent. Logistic regression can handle both categorical and continuous features, and can be regularized to decrease overfitting using L1 or L2 penalty terms (20).
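As a small worked illustration of the equation above, the sketch below evaluates the class probability for given coefficients. The function name and the coefficient values are hypothetical. It uses the algebraically equivalent form 1 / (1 + exp(-z)).

```python
import math

def predict_proba(x, beta0, betas):
    """Return P(Y=1 | X=x) for a logistic regression with the given coefficients."""
    z = beta0 + sum(b * xi for b, xi in zip(betas, x))  # linear combination
    return 1.0 / (1.0 + math.exp(-z))                   # equals exp(z) / (1 + exp(z))

# Hypothetical coefficients, for illustration only.
print(predict_proba([1.0, 2.0], beta0=-1.0, betas=[0.5, 0.25]))
```

When the linear combination is zero the predicted probability is exactly 0.5, which is the decision boundary of the classifier.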

Naive Bayes

Naive Bayes is a probabilistic classification algorithm that is based on Bayes’ theorem

P(A|B) = \frac{P(B|A)\: \cdot \: P(A)}{P(B)}

with an assumption of independence for the input features requiring them to be conditionally independent given the class label. This means that the value of one feature does not affect the probability of the value of another feature. The classification algorithm works by calculating the conditional probability of each class given the input features and choosing the class with the highest probability. The Naive Bayes algorithm calculates the posterior probability of each class through

P(Y=y|X) = \frac{P(X|Y=y) \: \cdot \: P(Y=y)}{P(X)}

where P(X|Y=y) is the likelihood function that models the probability distribution of the input features given the class label, P(Y=y) is the prior probability of the class, and P(X) is the ‘evidence factor’ that normalizes the probabilities (that is, it ensures that the probabilities for all possible classes sum to one). The conditional independence assumption allows the likelihood function to be factorized as a product of the class-conditional probability distributions of the individual features. While generally not considered prone to severe overfitting, the Naive Bayes algorithm can, instead of L1 or L2 regularization, use ‘additive smoothing’ (or Laplace smoothing), a hyper-parameter that helps avoid probabilities of zero. It does this by adding a small value to the count of each feature for each class, which provides a small amount of evidence for each feature even if it does not appear in the training examples for that class.
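The effect of additive smoothing can be seen in a minimal multinomial Naive Bayes sketch. The toy documents, labels and function names below are hypothetical and only serve the illustration. The evidence factor P(X) is omitted because it is the same for every class and does not change which class scores highest.

```python
import math
from collections import Counter

def train_nb(docs, labels, alpha=1.0):
    """Estimate log priors and smoothed log likelihoods from tokenized documents."""
    vocab = sorted({w for d in docs for w in d})
    log_prior, log_lik = {}, {}
    for c in sorted(set(labels)):
        class_docs = [d for d, y in zip(docs, labels) if y == c]
        log_prior[c] = math.log(len(class_docs) / len(docs))
        counts = Counter(w for d in class_docs for w in d)
        total = sum(counts.values()) + alpha * len(vocab)
        # alpha is added to every count, so unseen words never get probability zero
        log_lik[c] = {w: math.log((counts[w] + alpha) / total) for w in vocab}
    return log_prior, log_lik

def predict_nb(doc, log_prior, log_lik):
    """Return the class with the highest (unnormalized) log posterior."""
    scores = {
        c: log_prior[c] + sum(log_lik[c][w] for w in doc if w in log_lik[c])
        for c in log_prior
    }
    return max(scores, key=scores.get)

# Hypothetical toy corpus: 1 = disinformation, 0 = regular news.
docs = [["shocking", "secret", "cure"], ["official", "report", "released"],
        ["secret", "cure", "revealed"], ["report", "confirms", "official"]]
labels = [1, 0, 1, 0]
log_prior, log_lik = train_nb(docs, labels)
print(predict_nb(["secret", "report", "cure"], log_prior, log_lik))
```

Without smoothing (alpha = 0) the word "secret" would have zero probability under class 0, and any document containing it could never be assigned to that class.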

Support Vector Machines (SVM)

SVMs, developed at AT&T Bell Laboratories by Vapnik and colleagues, are powerful classification algorithms that aim to find the hyperplane that maximally separates the classes in the feature space in terms of distance (Cortes & Vapnik, 1995). This hyperplane is determined by a subset of the samples, called the ‘support vectors’. Simply put, the optimal separation is the one that minimizes an error function penalizing samples that fall on the wrong side of the margin. Mathematically, given the input feature vectors X_i and a binary response variable Y \in \{-1,1\}, the SVM algorithm (21) seeks to minimize

\left[\frac{1}{n}\sum_{i=1}^{n}\max\left(0, 1 - y_i(W^T X_i - b)\right)\right] + \lambda \|W\|^2

where W and b are the model’s parameters to be learned from the training data.

The hyperplane is defined by setting the decision function W^T X - b to zero, where X is the input feature vector. The SVM algorithm can be extended to handle non-linearly separable data by using kernel functions, which can map the input features to a higher-dimensional space where linear separation is possible. Popular kernel functions include the radial basis function (RBF), polynomial, and sigmoid kernels. Similar to the logistic regression algorithm, either L1 or L2 regularization can be used to prevent overfitting.
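To make the soft-margin objective more concrete, the sketch below evaluates it for a linear SVM, following the sign convention of the objective given earlier (decision score W^T X_i - b). The function name, weights and data points are hypothetical, and no optimization is performed. The code only computes the quantity that training would minimize.

```python
def svm_objective(W, b, X, y, lam):
    """Average hinge loss plus L2 penalty for a linear SVM with labels in {-1, 1}."""
    hinge = 0.0
    for xi, yi in zip(X, y):
        score = sum(w * v for w, v in zip(W, xi)) - b
        hinge += max(0.0, 1.0 - yi * score)  # zero when the point is beyond the margin
    return hinge / len(X) + lam * sum(w * w for w in W)

# Hypothetical weights and points, for illustration only.
X = [[2.0, 0.0], [-2.0, 0.0], [0.5, 0.0]]
y = [1, -1, 1]
print(svm_objective([1.0, 0.0], 0.0, X, y, lam=0.1))
```

Only the third point contributes to the hinge term here, since it lies inside the margin. Points that are classified correctly with room to spare add nothing to the loss.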

While SVMs can be computationally expensive and may not perform well on imbalanced data or when the decision boundary is not well-defined, they are known for their ability to handle high-dimensional data and their robustness to noise and outliers. They have been extensively tested and compared and are confirmed to yield good results, especially for classification (Meyer et al., 2003).

Random Forest

Random forest is an ensemble learning method that combines multiple decision trees to improve the accuracy and robustness of the classification. Single decision trees are known to have potential disadvantages such as the ‘dimensionality curse’ (22) and an oversensitivity to noise (Rokach & Maimon, 2005). A random forest addresses this by building a collection of K decision trees, where each tree is trained on a random sample of the training data and a random subset of the input features. All trees are trained in the same way, and the final result is formed from the individual class predictions of each tree, which are aggregated by majority (or weighted) voting.

While the individual trees can be regularized to prevent overfitting, the randomization in the ensemble already helps reduce overfitting and increases the diversity of the trees, making the algorithm more robust to noise and outliers. Random forests can handle both categorical and continuous features, and unlike SVMs, they can also be used for feature selection and importance ranking (Liu et al., 2012).
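The two sources of randomness and the final vote can be sketched without building actual trees. The helper names below are hypothetical, and sampling rows with replacement (bootstrapping) is assumed as the way of drawing the random sample of training data.

```python
import random
from collections import Counter

def bootstrap_sample(n_rows, n_features, max_features, rng):
    """Draw row indices with replacement and a random subset of feature indices."""
    rows = [rng.randrange(n_rows) for _ in range(n_rows)]
    features = sorted(rng.sample(range(n_features), max_features))
    return rows, features

def majority_vote(tree_predictions):
    """Return the class predicted by the largest number of trees."""
    return Counter(tree_predictions).most_common(1)[0][0]

rng = random.Random(0)  # fixed seed so the sketch is reproducible
for tree in range(3):
    rows, features = bootstrap_sample(n_rows=8, n_features=5, max_features=2, rng=rng)
    print(f"tree {tree}: rows={rows} features={features}")

# Hypothetical predictions of five trees for one article.
print(majority_vote([1, 0, 1, 1, 0]))
```

Each tree sees a different view of the data, which is what makes their errors less correlated and the aggregated vote more stable than any single tree.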

K-Nearest Neighbours (kNN)

kNN is a non-parametric classification algorithm that classifies input data points based on the class labels of their nearest neighbours in the feature space. The kNN algorithm assigns a class label by finding the k closest data points in the training set and taking the majority vote of their class labels. The value of k is a hyper-parameter that controls the bias-variance trade-off of the algorithm: larger values of k lead to smoother decision boundaries and lower variance but higher bias, while smaller values of k lead to more complex decision boundaries and higher variance but lower bias. This makes k the default parameter to adjust to prevent overfitting. The distance metric between the input data points can be Euclidean distance (the scikit-learn default and the distance metric used in this study), Manhattan, Minkowski, or any other suitable distance metric.

A potential shortcoming of the default distance function is that many irrelevant features can result in a dimensionality curse problem (Jiang et al., 2007). While the kNN algorithm can handle both categorical and continuous features, and can be used with various distance metrics and weighting schemes, it is a so-called ‘lazy learning’ algorithm: it stores the training data at the training stage and delays its learning until classification, which can be a practical disadvantage for large datasets.
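The ‘lazy’ nature of kNN is visible in a minimal sketch: there is no training step, only a distance computation against all stored points at prediction time. The function name and data points below are hypothetical, and Euclidean distance is used as in this study.

```python
import math
from collections import Counter

def knn_predict(query, train_X, train_y, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # All work happens here, at classification time: one distance per stored point.
    dists = sorted((math.dist(query, x), label) for x, label in zip(train_X, train_y))
    nearest_labels = [label for _, label in dists[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical 2-dimensional feature vectors, for illustration only.
train_X = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0], [5.0, 6.0]]
train_y = [0, 0, 1, 1, 1]
print(knn_predict([0.2, 0.2], train_X, train_y, k=3))
print(knn_predict([5.0, 5.5], train_X, train_y, k=3))
```

With k equal to the size of the training set the prediction collapses to the majority class, which illustrates the high-bias end of the trade-off described above.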

Gradient Boosting (GB)

Gradient Boosting, like random forest, is a type of ensemble learning method, but Gradient Boosting combines multiple weak classifiers (typically decision trees) to form a stronger classifier. It works by iteratively adding new models that are trained on the errors of the previous classifiers. Mathematically the Gradient Boosting algorithm

\hat y(X) = \sum_{m=1}^{M}\hat y_m(X),

with M as the number of trees, \hat y_m(X) as the prediction of the m-th tree, seeks to minimize the cross-entropy loss objective function

{ L_{BCE}} = -\frac{1}{N} \sum_{i=1}^N \left[Y_i \cdot \log(\hat{p}_i) + (1-Y_i) \cdot \log(1-\hat{p}_i)\right]

where \hat p is the predicted class probability, and Y_i \cdot \log(\hat{p}_i) and (1-Y_i) \cdot \log(1-\hat{p}_i) calculate the error for the positive and negative cases respectively.

The algorithm can be further optimized by tuning various hyper-parameters such as the learning rate, the maximum depth of the decision trees, and the number of iterations. While Gradient Boosting offers many more hyper-parameter options, in this study only the maximum depth was manually restricted in order to prevent overfitting and to reduce the training time. The Gradient Boosting algorithm is known for its ability to handle high-dimensional data and its robustness to outliers and noisy data. However, this algorithm can be computationally intensive and good performance may be dependent on careful hyper-parameter tuning.
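The additive prediction and the cross-entropy objective can be illustrated with a few lines of code. This is a sketch under assumptions: the per-tree outputs are hypothetical numbers rather than fitted trees, and the summed score is assumed to be turned into a probability with the sigmoid function.

```python
import math

def sigmoid(z):
    """Map a raw score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def ensemble_score(tree_outputs):
    """Additive model: the prediction is the sum of the M tree outputs."""
    return sum(tree_outputs)

def binary_cross_entropy(y_true, p_hat, eps=1e-12):
    """Mean cross-entropy loss. Probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, p_hat):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)

# Hypothetical outputs of M = 3 trees for two articles.
scores = [ensemble_score([0.8, 0.5, 0.3]), ensemble_score([-0.6, -0.4, 0.1])]
probs = [sigmoid(s) for s in scores]
print(binary_cross_entropy([1, 0], probs))
```

Each additional tree is fitted so that adding its output to the running sum lowers this loss, which is what "trained on the errors of the previous classifiers" amounts to in practice.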

Extreme Gradient Boosting (XGBoost)

XGBoost, the third ensemble learning classifier used in this study, is a variant of Gradient Boosting that uses a more advanced implementation of the algorithm and introduces additional regularization techniques to prevent overfitting. Rather than relying on first-order gradient information alone, the XGBoost algorithm uses a combination of gradient descent and second-order optimization techniques to iteratively update the model and reduce the loss function. For binary classification this loss is again a cross-entropy loss, here written in terms of the raw ensemble score passed through the sigmoid function \sigma:

{L}_{\text{GBDT}} = -\sum_{i=1}^n \left[ Y_i \ln \sigma(\hat y_{\text{GBDT}}(X_i)) + (1-Y_i) \ln (1-\sigma(\hat y_{\text{GBDT}}(X_i))) \right]

As with the Gradient Boosting algorithm, it allows for a wide variety of regularization techniques and parameters to prevent overfitting and improve the generalization performance. For this study (as with Gradient Boosting) only the maximum depth was manually restricted to prevent overfitting and to reduce training times. XGBoost is known for its speed, scalability, and ability to handle large-scale and high-dimensional data. It has been used successfully in many applications such as click-through rate prediction, image classification and text classification, but just like Gradient Boosting it may require extensive hyper-parameter tuning to get the best performance.
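The second-order idea can be sketched for the logistic loss shown above. For a raw score z and label y, the first derivative of the loss is sigmoid(z) - y and the second derivative is sigmoid(z)(1 - sigmoid(z)). The leaf-weight formula below, -G / (H + lambda), is the regularized Newton step described in the XGBoost paper. The function names and the example values are hypothetical, and this is not the library's code.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def logistic_grad_hess(y, z):
    """First and second derivative of the logistic loss w.r.t. the raw score z."""
    p = sigmoid(z)
    return p - y, p * (1.0 - p)

def leaf_weight(labels, raw_scores, reg_lambda=1.0):
    """Newton-style update for one leaf: -G / (H + lambda), with G and H summed over its samples."""
    G = H = 0.0
    for y, z in zip(labels, raw_scores):
        g, h = logistic_grad_hess(y, z)
        G += g
        H += h
    return -G / (H + reg_lambda)

# Hypothetical leaf containing two positive examples with current raw score 0.
print(leaf_weight([1, 1], [0.0, 0.0], reg_lambda=1.0))
```

The regularization term in the denominator shrinks the update, which is one of the mechanisms that distinguishes XGBoost from plain Gradient Boosting.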

Evaluation & Analysis

Loss of disinformation detection performance was measured using the four test sets, and compared across the NLP architectures composed of the combinations of six feature extraction methods and seven general purpose classification algorithms (table 1). NLP architectures were kept identical in train and test data sets, and were kept as similar as possible in terms of preprocessing, varying only in the elements of interest: feature extraction methods and classification algorithms.

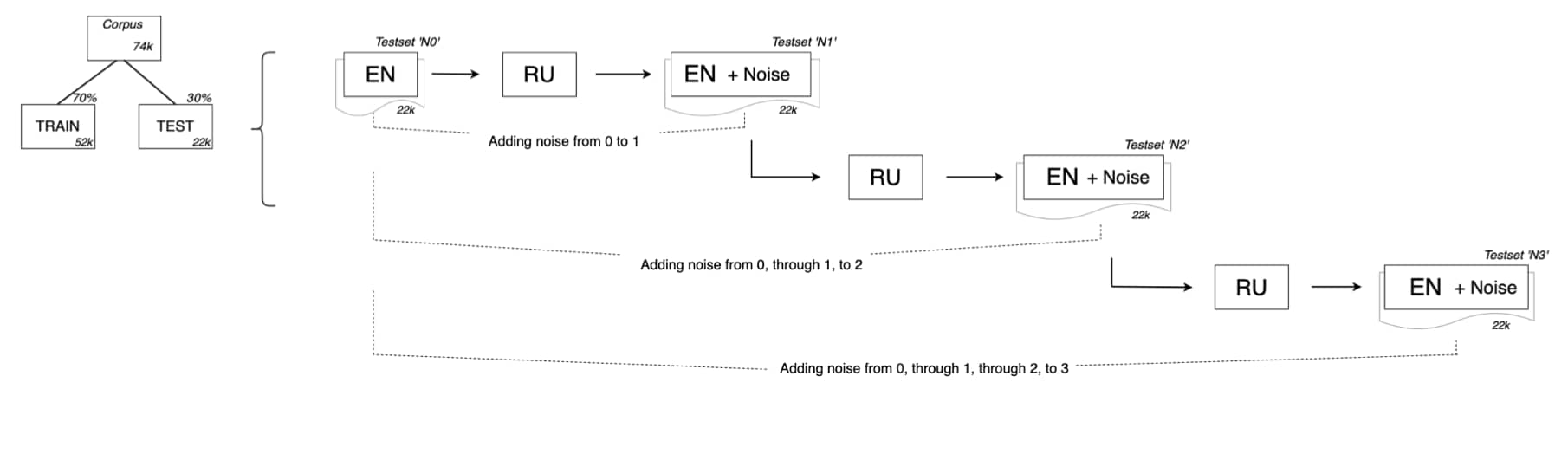

For evaluation the AUC ROC metric was chosen. The AUC ROC (‘Area Under the Receiver Operating Characteristic Curve’) measures the performance of the model by calculating the area (i.e. the AUC) under the receiver operating characteristic (ROC) curve. This curve is a graphical representation of a model’s performance, created by plotting the ‘true positive rate’ (TPR) against the ‘false positive rate’ (FPR) at various classification thresholds, with the TPR defined as TP / (TP + FN) and the FPR defined as FP / (FP + TN).

The area (AUC) is then calculated (using the scikit-learn library) by summing up the trapezoids that make up the area under the ROC curve. This results in a value between 0 and 1, where 1 means the model ranks every positive example above every negative one, and 0.5 means it does no better than chance.
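The trapezoid summation can be reproduced in plain Python to show what the metric computes. This is a minimal sketch for illustration, not the scikit-learn implementation used in the study. The function names and the four example scores are hypothetical.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) points obtained by lowering the threshold score by score."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    points, tp, fp, i = [(0.0, 0.0)], 0, 0, 0
    while i < len(order):
        threshold = scores[order[i]]
        # examples with tied scores cross the threshold together
        while i < len(order) and scores[order[i]] == threshold:
            if y_true[order[i]] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points

def auc_trapezoid(points):
    """Sum the areas of the trapezoids between consecutive ROC points."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Hypothetical labels and classifier scores for four articles.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(auc_trapezoid(roc_points(y_true, scores)))
```

Because only the ordering of the scores matters, the AUC does not depend on any single classification threshold.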

AUC ROC is often preferred over accuracy as an evaluation metric for binary classification tasks because it is more robust to imbalanced datasets, and is commonly considered a more comprehensive evaluation metric, as it takes into account the trade-off between TPR and FPR.

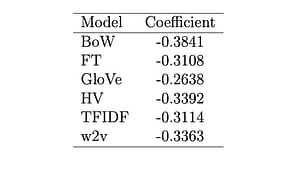

The AUC ROC scores are calculated four times for each NLP architecture, once per test set, where each test is increasingly noisy due to MT induced lexical differences. From these four scores per architecture and the Jaro-Winkler distance an (ordinary least squares) linear regression coefficient is calculated. These regression coefficients, as estimates for the sensitivity of each architecture to MT induced noise, were used throughout the rest of this study.

Figure 3. An illustration taken from de Oliveira et al., 2021, that visualizes the relationship of the TPR and FPR with the AUC ROC for different (fictional) classifiers.
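The sensitivity estimate, an ordinary least squares slope through four (distance, AUC ROC) points per architecture, can be sketched as follows. The distances and scores below are made-up placeholder values, not results from this study, and the function name is hypothetical.

```python
def ols_slope(x, y):
    """Slope of the ordinary least squares line through the points (x_i, y_i)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx

# Placeholder values for one architecture: noise level per test set and its AUC ROC.
distances = [0.00, 0.10, 0.20, 0.30]
auc_scores = [0.95, 0.90, 0.86, 0.80]
print(ols_slope(distances, auc_scores))
```

A more negative slope indicates that performance falls faster as the test set drifts further from the source text, that is, a higher sensitivity to MT induced noise.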

Analysis

Firstly, the AUC ROC scores on the test sets without noise (i.e. without back-translation) were compared to those with one level of back-translation, those with one level of back-translation were compared to those with two levels, and so forth up to the third level of back-translation. Here paired-samples t-tests were used to test whether differences could be regarded as statistically significant.
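The test statistic of such a paired comparison is the mean of the per-architecture differences divided by its standard error. The sketch below computes only this statistic. Obtaining a p-value additionally requires the t distribution with n - 1 degrees of freedom, which is left to a statistics library. The scores are placeholder values, not results from this study.

```python
import math

def paired_t_statistic(before, after):
    """t statistic for paired samples: mean difference over its standard error."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)  # sample variance
    return mean / math.sqrt(var / n)

# Placeholder AUC ROC scores of the same four architectures on two test sets.
no_noise = [0.95, 0.92, 0.90, 0.88]
one_level = [0.91, 0.90, 0.85, 0.86]
print(paired_t_statistic(no_noise, one_level))
```

Pairing matters here because the same architecture is measured on both test sets, so the between-architecture variation cancels out in the differences.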

Secondly, Friedman’s tests were used in this study, for both the feature extraction methods as well as for the classification algorithms, to test for significant differences in sensitivity to MT induced noise. Its test statistic Q was calculated as follows:

Q = \frac{12}{nk(k+1)} \sum_{j=1}^{k} R_j^2 - 3n(k+1)

where k is the number of ‘groups’ (i.e. feature extraction methods), n is the number of measurements per group (i.e. the number of classification algorithms), with the roles reversed in the second test, and R_j is the sum of the ranks for the j-th ‘group’.
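The statistic Q can be computed directly from a table with one row per measurement and one column per group. The sketch below ranks the values within each row (using average ranks for ties) and then applies the formula above. It does not apply a tie correction to Q, and the function names and input values are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1 (smallest) upward, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks

def friedman_q(table):
    """Friedman statistic for a table of n rows (measurements) and k columns (groups)."""
    n, k = len(table), len(table[0])
    rank_sums = [0.0] * k
    for row in table:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12.0 / (n * k * (k + 1)) * sum(R * R for R in rank_sums) - 3.0 * n * (k + 1)

# Hypothetical sensitivities: 3 measurements (rows) for 3 groups (columns).
print(friedman_q([[-0.5, -0.3, -0.1], [-0.6, -0.2, -0.1], [-0.4, -0.3, -0.2]]))
```

When every row orders the groups identically, Q reaches its maximum of n(k - 1). When the rank sums are all equal, Q is zero.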

Finally, after applying Friedman tests for both the feature extraction methods and the classification algorithms, post-hoc tests in the form of Nemenyi tests were performed to identify which feature extraction methods differed from which others, and which classification algorithms differed from which others, in their sensitivity to MT induced noise in the field of disinformation detection. The Nemenyi test is an adaptation of the Tukey HSD test that is appropriate for this design as it uses rankings and controls the family-wise error rate, which is needed to prevent the false positive rate from increasing when making multiple pairwise comparisons.

Results

Table 2. The mean Jaro-Winkler (and Hamming) distances between the source and the MT back-translations. All distances are in relation to the test N0 source set.

Table 3. Performance scores (AUC ROC) for the 42 NLP architectures used in this study with corresponding regression coefficients (β).

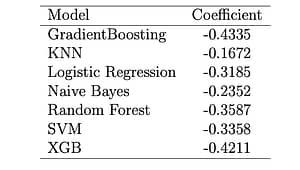

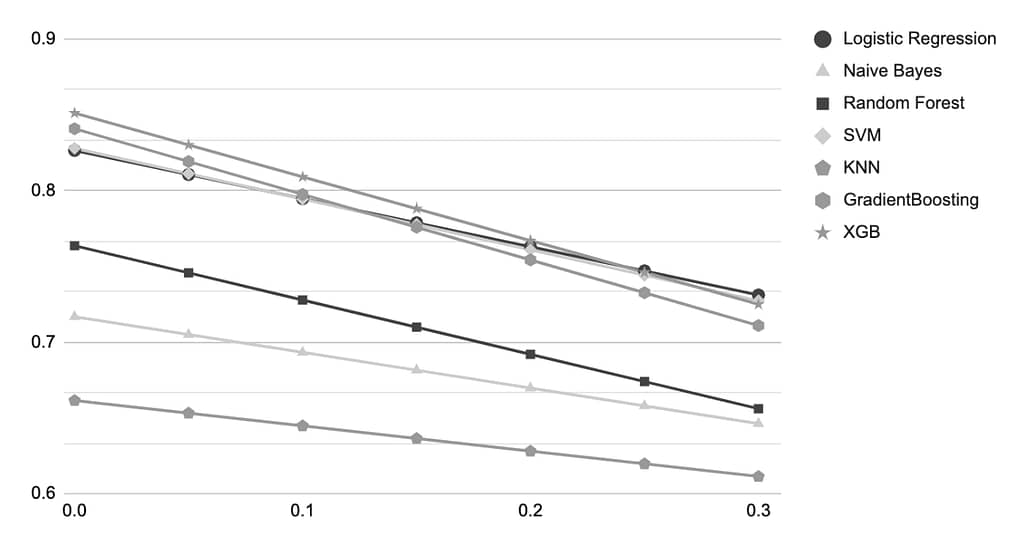

Isolating the performance decrease for the feature extraction methods, and likewise for the classification algorithms, results in the averages listed in table 4 and table 5 respectively:

Table 4. Performance decrease by regression coefficient for the feature extraction methods.

Table 5. Performance decrease by regression coefficient for the classification algorithms.

Figure 4. Linear approximations of the performance per feature extraction method where effects of classification algorithms are fixed.

Figure 5. Linear approximations of the performance per classification algorithm where effects of feature extraction methods are fixed.

Conclusion

This study found that machine translation induced noise negatively impacts the ability to detect disinformation when relying on common NLP architectures. The performance of NLP architectures dropped significantly across the board after one level of back-translation. By looking at 42 different NLP architectures this study found that this was true for every feature extraction method and for every classification algorithm whether in isolation or combined as a full architecture.

Furthermore, it was discovered that the extent to which performance decreases (i.e. ‘MT noise sensitivity’) varied significantly between the feature extraction methods, as well as between the classification algorithms. This means that both the classification algorithm and the feature extraction method that currently perform best, and might currently be implemented, may no longer be the best performing ones as machine translated (and possibly machine generated) texts become more prevalent on platforms.

Combined, these findings are highly valuable, albeit potentially worrying, in the context of disinformation spread on social media. As social media platforms increasingly rely on automated NLP based detection to combat the spread of disinformation, this study’s findings suggest that the current trend in machine translation services is likely to exacerbate the disinformation problem, as machine translated disinformation slips through these NLP based detection methods at higher rates.

footnotes

- This variety is therefore reflected in the dataset compiled for this study.

- Parkinson HJ. Click and elect: how fake news helped Donald Trump win a real election. The Guardian. 2016 Nov 14; Solon O. Facebook’s failure: did fake news and polarized politics get Trump elected? The Guardian. 2016 Nov 10; Cantarella, M., Fraccaroli, N., & Volpe, R. (2019). Does Fake News Affect Voting Behaviour? PSN: Political Behavior (Topic).

- This ‘trend’ has been reported on extensively by traditional media: nbcnews, nbcnews, techcrunch, reuters, forbes.

- While the potential for disinformation of this expanded capability may be obvious, this study focusses on machine translation.

- Government regulation aimed at holding social media platforms accountable for the spread of misinformation on their platforms is a complicated proposal. At least partly because strong regulation would likely be disproportionately costly for smaller platforms (such as reddit and twitter) and would likely unintentionally benefit the competitive advantage of the already dominant players such as Meta.

- PolitiFact is an American nonprofit project operated by the Poynter Institute in St. Petersburg, Florida, with offices there and in Washington, D.C. PolitiFact.com is a fact-checking website that rates the accuracy of claims by elected officials and others.

- ISOT is the Information Security and Object Technology Research Lab of the engineering department of the University of Victoria (Canada).

- EUvsDisinfo is a project of the European External Action Service’s East StratCom Task Force. It was established in 2015 to better forecast, address, and respond to the Russian Federation’s ongoing disinformation campaigns affecting the European Union, its Member States, and countries in the shared neighbourhood.

- Snopes, formerly known as the Urban Legends Reference Pages, is a fact-checking website. It has been described as a “well-regarded reference for sorting out myths and rumors” on the Internet. It is owned by Chris Richmond and Drew Schoentrup.

- All data sets, including the compiled data set, can also be found on the author’s github at https://github.com/StevenPeutz/Disinformation-NLP.

- Googletrans is a free Python library that implements the Google Translate API (https://pypi.org/project/googletrans/).

- The Google Translate documentation states a higher character limit; however, experience showed that going above 2800 characters resulted in a significant increase in ‘character limit exceeded’ failures.

- The scaling factor defaults to 0.1 and was not altered in this study.

- Conventionally the input for feature extraction methods (for the task of text classification) involves thorough preprocessing. In this study preprocessing was kept to a minimum to avoid removing potential stylistic differences between disinformation and normal texts. Based on this consideration the preprocessing step of stop-word removal was omitted (but e.g. stemming was not).

- CBOW trained word2vec is thought to perform better for classification of news articles, whereas skip-gram trained word2vec performs better on shorter tweet format text classification (Jang et al., 2019).

- The input layer of the model consists of one-hot encoded vectors for each of the surrounding words in the window. The output layer consists of a softmax layer over the vocabulary, which produces a probability distribution over all the words in the vocabulary. The weights of the model are learned by minimizing the negative log-likelihood of the training data. Specifically, the CBOW Word2Vec model uses the following objective function: J_\theta = \frac{1}{T}\sum^{T}_{t=1}\log{p}\left(w_{t}\mid{w}_{t-n},\ldots,w_{t-1}, w_{t+1},\ldots,w_{t+n}\right)

- This reduction was performed in order to meet memory size requirements as well as to maintain equal dimension sizes for all pretrained embedding methods.

- Though the R language/software was not used in this project, the explanation was sourced from its documentation here https://cran.r-project.org/web/packages/text2vec/vignettes/glove.html.

- see also: Mikolov, T., Grave, E., Bojanowski, P., Puhrsch, C., & Joulin, A. (2017). Advances in pre-training distributed word representations. arXiv preprint arXiv:1712.09405.

- See Appendix C for a brief description of L1 and L2 regularization.

- Though there are versions that use hard-margin classifiers, in this study a soft-margin classifier was used, since a sufficiently small value for \lambda also results in the hard-margin classifier for linearly separable input data. This “soft margin” implementation is also more common in software packages. More information can be found in: Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine Learning, 20, 273-297.

- The ‘Curse of Dimensionality’ as explained in Rokach (2016): “If the data splits approximately equally on every split, then a univariate decision tree cannot test more than O(log n) features (where n is the dataset size). This puts decision trees at a disadvantage for tasks with many relevant features”. Rokach, L. (2016). Decision forest: Twenty years of research. Information Fusion, 27, 111-125.

- The NLP architectures were identical in train and test data sets, and were kept as similar as possible in terms of preprocessing, varying only in the elements of interest: feature extraction methods and classification algorithms.

- Here when including the kNN algorithm (both with and without replacement by mean correction) in the Nemenyi post-hoc tests, kNN was also found to be significantly less sensitive to MT noise compared to both Gradient Boosting and XGB. However, these results were excluded due to the poor base performance of the kNN algorithm.

- It is important to note that while character-based embeddings were not focussed on in this study, they were not completely unaccounted for, as FastText does learn character-based embeddings prior to summing them into word-based embeddings.

references

- Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of economic perspectives, 31 (2), 211-236.

- Banaji, S., Bhat, R., Agarwal, A., Passanha, N., & Sadhana Pravin, M. (2019). WhatsApp vigilantes: An exploration of citizen reception and circulation of WhatsApp misinformation linked to mob violence in India.

- Barrón-Cedeno, A., Jaradat, I., Da San Martino, G., & Nakov, P. (2019). Proppy: Organizing the news based on their propagandistic content. Information Processing & Management, 56 (5), 1849-1864.

- Bavel, J. J. V., Baicker, K., Boggio, P. S., Capraro, V., Cichocka, A., Cikara, M., & Willer, R. (2020). Using social and behavioural science to support COVID-19 pandemic response. Nature human behaviour, 4 (5), 460-471.

- Born, K. & Edgington, N., 2017. Analysis of Philanthropic Opportunities to Mitigate the Disinformation/Propaganda Problem, William and Flora Hewlett Foundation. Belgium. Retrieved from (https://policycommons.net/artifacts/1847302/analysis-of-philanthropic-opportunities-to-mitigate-the-disinformationpropaganda-problem/2593610/) on 24 Mar 2023. CID: 20.500.12592/481vs6.

- Centola, D. (2010). The spread of behavior in an online social network experiment. science, 329 (5996), 1194-1197.

- Cosentino, G. (2020). Tribal Politics: The Disruptive Effects of Social Media in the Global South. In: Social Media and the Post-Truth World Order. Palgrave Pivot, Cham. (https://doi.org/10.1007/978-3-030-43005-4_5)

- Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine learning, 20, 273-297.

- Ennab, F., Nawaz, F. A., Narain, K., Nchasi, G., Essar, M. Y., Head, M. G., … & Shen, B. (2022). Monkeypox outbreaks in 2022: battling another “pandemic” of misinformation. International Journal of Public Health, 67, 1605149.

- European Commission, Joint Research Centre, Bruns, H., Dessart, F., Pantazi, M. (2022). Covid-19 misinformation : preparing for future crises : an overview of the early behavioural sciences literature, Publications Office of the European Union. (https://data.europa.eu/doi/10.2760/41905)

- Freitas, A., & Trench, B. (2020). Disinformation in Brazil’s 2018 election: Evidence from a WhatsApp study. Journal of International Affairs, 73(1), 113-127.

- Guess, A. M., & Lyons, B. A. (2020). Misinformation, disinformation, and online propaganda. Social media and democracy: The state of the field, prospects for reform, 10.

- Jiang, L., Cai, Z., Wang, D., & Jiang, S. (2007, August). Survey of improving k-nearest-neighbor for classification. In Fourth international conference on fuzzy systems and knowledge discovery (FSKD 2007) (Vol. 1, pp. 679-683). IEEE.

- Kaplan, A. M. (2015). Social media, the digital revolution, and the business of media. International Journal on Media Management , 17 (4), 197-199.

- KhudaBukhsh, A. R., Sarkar, R., Kamlet, M. S., & Mitchell, T. (2021, May). We Don’t Speak the Same Language: Interpreting Polarization through Machine Translation. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 35, No. 17, pp. 14893-14901). Chicago.

- Kreiss, D., & McGregor, S. C. (2018). Technology firms, government and the far right in the Brexit referendum. Journal of European Public Policy, 25(8), 1191-1209.

- Lai, S., Liu, K., He, S., & Zhao, J. (2016). How to generate a good word embedding. IEEE Intelligent Systems, 31 (6), 5-14.

- Lazer, D. M., Baum, M. A., Benkler, Y., Berinsky, A. J., Greenhill, K. M., Menczer, F., Metzger, M. J., Nyhan, B., Pennycook, G., Rothschild, D., Schudson, M., Sloman, S. A., & Sunstein, C. R. (2018). The science of fake news. Science, 359(6380), 1094-1096. (https://doi.org/10.1126/science.aao2998)

- Lee, S. K., Sun, J., Jang, S., & Connelly, S. (2022). Misinformation of COVID-19 vaccines and vaccine hesitancy. Scientific reports, 12 (1), 13681. https://doi.org/10.1038/s41598-022-17430-6

- Lewandowsky, S., Ecker, U. K., Seifert, C. M., Schwarz, N., & Cook, J. (2012). Misinformation and Its Correction: Continued Influence and Successful Debiasing. Psychological science in the public interest : a journal of the American Psychological Society, 13 (3), 106–131. (https://doi.org/10.1177/1529100612451018)

- Liu, Y., Wang, Y., Zhang, J. (2012). New Machine Learning Algorithm: Random Forest. In: Liu, B., Ma, M., Chang, J. (eds) Information Computing and Applications. ICICA 2012. Lecture Notes in Computer Science, vol 7473. Springer, Berlin, Heidelberg. (https://doi.org/10.1007/978-3-642-34062-8_32)

- Manning, C. D. (2009). An introduction to information retrieval. Cambridge university press.

- Meyer, D., Leisch, F., & Hornik, K. (2003). The support vector machine under test. Neurocomputing, 55 (1-2), 169-186.

- Mikolov, T., Chen, K., Corrado, G., & Dean, J. (2013). Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781.

- Mikolov, T., Grave, E., Bojanowski, P., Puhrsch, C., & Joulin, A. (2017). Advances in pre-training distributed word representations. arXiv preprint arXiv:1712.09405.

- Ng, L. H. X., Cruickshank, I. J., & Carley, K. M. (2022). Cross-platform information spread during the January 6th capitol riots. Social Network Analysis and Mining, 12(1), 133. (https://doi.org/10.1007/s13278-022-00937-1)

- de Oliveira, N. R., Pisa, P. S., Lopez, M. A., de Medeiros, D. S. V., & Mattos, D. M. F. (2021). Identifying Fake News on Social Networks Based on Natural Language Processing: Trends and Challenges. Information, 12 (1), 38. MDPI AG. Retrieved from http://dx.doi.org/10.3390/info12010038

- Pennington, J., Socher, R., & Manning, C. D. (2014, October). Glove: Global vectors for word representation. In Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP) (pp. 1532-1543).

- Rokach, L., & Maimon, O. (2005). Decision trees. Data mining and knowledge discovery handbook, 165-192.

- Su, Y. (2021). It doesn’t take a village to fall for misinformation: Social media use, discussion heterogeneity preference, worry of the virus, faith in scientists, and COVID-19-related misinformation beliefs. Telematics and Informatics, 58, 101547.

- Shu, K., Sliva, A., Wang, S., Tang, J., & Liu, H. (2019). Fake News Detection on Social Media: A Data Mining Perspective. ACM SIGKDD Explorations Newsletter, 20(1), 22-36. (https://doi.org/10.48550/arXiv.1708.01967)

- Tandoc Jr, E. C. (2019). The facts of fake news: A research review. Sociology Compass, 13 (9), e12724.

- Tandoc Jr, E. C., Lim, Z. W., & Ling, R. (2018). Defining “Fake News”. Digital Journalism, 6 (2), 137-153. (https://doi.org/10.1080/21670811.2017.1360143)

- Tucker, J. A., Guess, A., Barberá, P., Vaccari, C., Siegel, A., Sanovich, S., & Nyhan, B. (2018). Social media, political polarization, and political disinformation: A review of the scientific literature (March 19, 2018).

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., & Polosukhin, I. (2017). Attention is all you need. Advances in neural information processing systems, 30.

- Vieira, L. N., O’Hagan, M., & O’Sullivan, C. (2021). Understanding the societal impacts of machine translation: a critical review of the literature on medical and legal use cases. Information, Communication & Society, 24 (11), 1515-1532.

- Wang, Y., Qin, J., & Wang, W. (2017, October). Efficient approximate entity matching using jaro-winkler distance. In Web Information Systems Engineering–WISE 2017: 18th International Conference, Puschino, Russia, October 7-11, 2017, Proceedings, Part I (pp. 231-239). Cham: Springer International Publishing.

- Wardle, C. (2017). Fake news. It’s complicated. First draft, 16, 1-11.

- Winkler W. E. (1990). String Comparator Metrics and Enhanced Decision Rules in the Fellegi-Sunter Model of Record Linkage, Proceedings of the Section on Survey Research Methods, American Statistical Association, 354–359.

- Xiao, X., Borah, P., & Su, Y. (2021). The dangers of blind trust: Examining the interplay among social media news use, misinformation identification, and news trust on conspiracy beliefs. Public Understanding of Science, 30 (8), 977-992.

I’m always happy to talk about such interesting topics as this, so feel free to contact me for any feedback or questions!